Eric Wilson CPF introduces a new measurement of probabilistic forecast performance that measures error, range and probability all in one formula. It goes beyond grading the forecaster, instead providing a valuable snapshot of a forecast’s accuracy, reliability and usefulness – in a way that is significantly easier to perform than existing methods.

Editor’s comment: This formula may be the first to holistically measure forecast error, range, and probability, allowing you to compare models, compare performance, completely measure the forecaster, and identify outliers. Think MAPE, MPE and FVA, but for range forecasts and with more insight. This easy-to-use formula could be a game changer in forecast performance metrics. Eric welcomes feedback directly or via the comments section below.

A New, Improved Approach to MAPE

This article introduces a new theoretical approach I am considering for measuring probabilistic or range forecasts. Measures of forecast performance have been developed over the past decades, with TPE, the Brier Score and others proving effective. The problem I encountered was that, whilst some evaluate accuracy and others evaluate the performance of the distribution, few look at both on the same scale in a way that is easy to use. To overcome this, I have developed a single scoring function for probabilistic and range forecasts that allows forecasters to measure their forecasts consistently and easily, even judgmental or empirical range forecasts.

Why We Predict Ranges Instead Of An Exact Number

Probabilistic and range forecasts are forms of predictive probability distributions of future variability and have enjoyed increasing popularity in recent years. What we’re talking about is the difference between trying to predict exact future sales precisely and predicting a range of sales for that period, or the expected variability. It is also the difference between being accused of always being wrong and proudly forecasting with 100% accuracy. For more insight into this, see my previous article entitled Stop Saying Forecast Are Always Wrong.

Just because you are accurate does not mean you are precise, however, or that you couldn’t do better, and it does not mean all probabilistic forecasts are created equal. With the proliferation of these probabilistic models and range forecasts arises the need for tools to evaluate the appropriateness of models and forecasts.

Range Forecasts Give Us More Information

A point forecast has only one piece of information, and you only need to measure that point against the actual outcome, which is generally expressed as some type of percentage error such as MAPE. The beauty of range forecasts is that you are providing more information to make better decisions. Instead of one piece of information you potentially have three, all of which are helpful. They are:

1) The upper and lower range or limit

2) The size of the total range or amount of variability

3) The probability of that outcome

To evaluate a range forecast appropriately, you should measure all three components, looking at accuracy, precision and reliability.

So, when we say that we will sell between 50 and 150 units and are 90% confident, how do we know if we did well? One way I am proposing is to use a type of scoring rule (which, for the purposes of this article, I am referring to as an (E)score). Conceptually, scoring rules can be thought of as error measures for range or probability forecasts. This score is not an error measurement in itself, but it helps measure the relative error and reliability of probabilistic forecasts that contain all three components.

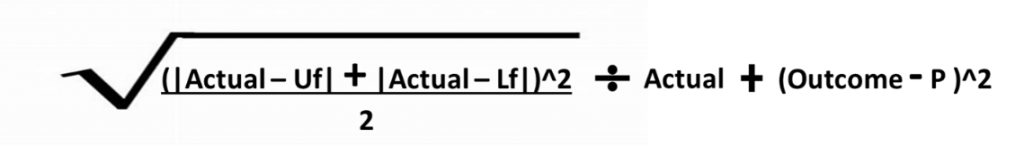

The scoring rule I have developed takes the square root of the mean squared error, using the upper and lower limits as the forecast, divides it by the actual, and then adds a scoring function for probabilistic forecasts.

(E)score = RMSE / Actual + BSf

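Or, with BSf written out as the Brier-style probability term (Outcome - P)^2:

(E)score = Sqrt(((|Actual - Uf| + |Actual - Lf|)^2)/2)/Actual + (Outcome - P)^2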

Where:

Uf=upper limit

Lf=lower limit

Outcome = 1 if the actual falls within the range, 0 if it falls outside

P = probability assigned to the range

Actual = the observed value

Ex. Let us assume that we have historical information showing the probability of actuals falling within a range of +/- 50 units for each period (this could also be empirical data). We create our forecast with a wide range, estimating that actuals will fall somewhere between 50 and 150 units. Because the range is wide, we are fairly confident and assign a 90% probability to actuals falling within it. In this example, actuals come in exactly in the middle of the range at 100. For this forecast, our (E)score = .72.

Sqrt(((|100-150|+|100-50|)^2)/2)/100+(1-.9)^2=.72
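The full calculation can be sketched in a few lines of Python. This is a minimal sketch, not a reference implementation: the function name `e_score` and its parameters are hypothetical, it assumes the actual is nonzero (it is used as a divisor), and it assumes an actual landing exactly on a limit counts as inside the range, which the article does not specify.

```python
import math


def e_score(actual: float, upper: float, lower: float, prob: float) -> float:
    """Hypothetical helper: sqrt(((|A - Uf| + |A - Lf|)^2) / 2) / A + (Outcome - P)^2.

    Assumes actual != 0 and treats an actual on a limit as inside the range.
    """
    # Step 1: square the summed deviations from both limits, divide by 2
    mse = (abs(actual - upper) + abs(actual - lower)) ** 2 / 2
    # Step 2: take the root to return to units, then scale by the actual
    uncertainty_error = math.sqrt(mse) / actual
    # Step 3: Brier-style term; outcome is 1 inside the range, else 0
    outcome = 1 if lower <= actual <= upper else 0
    goodness_of_fit = (outcome - prob) ** 2
    # Step 4: add the two parts
    return uncertainty_error + goodness_of_fit


print(round(e_score(100, 150, 50, 0.9), 2))  # 0.72
```

Rounded to two decimals this reproduces the .72 from the worked example; unrounded, the score is about 0.7171.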

Dissecting The Components Of The (E)Score Formula

Step 1: Breaking the formula down into its components begins with determining the relative error. This is done by measuring the Mean Squared Error of the summed deviations of the actual from the upper limit and from the lower limit: square the sum and divide by 2 (the total number of observations, one upper and one lower).

((|actual - upper limit| + |actual - lower limit|)^2)/2=MSE

((|100 - 150| + |100 - 50|)^2)/2=5000
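Step 1 on its own, as a hypothetical `range_mse` helper. Following the article's formula, the two absolute deviations are summed first and the sum is squared, rather than each deviation being squared separately.

```python
def range_mse(actual: float, upper: float, lower: float) -> float:
    """Hypothetical helper for Step 1: (|actual - upper| + |actual - lower|)^2 / 2."""
    summed_deviation = abs(actual - upper) + abs(actual - lower)
    # Divide by 2: one observation for the upper limit, one for the lower
    return summed_deviation ** 2 / 2


print(range_mse(100, 150, 50))  # 5000.0
```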

One thing that stands out here is that, no matter the actual value, the summed deviation in the numerator is the same for any actual that falls within the range: it always equals the width of the range (upper limit minus lower limit). So if our range runs from a lower limit of 50 to an upper limit of 150, an actual of 125 gives |125 - 150| + |125 - 50| = 25 + 75 = 100, and an actual of 75 gives |75 - 150| + |75 - 50| = 75 + 25 = 100; both produce the same result of 100. This is correct, since the forecast is a range consisting of all possible numbers within it, so every actual inside it is an equal total deviation from the forecast. It also rewards precision and smaller ranges and, conversely, penalizes larger ranges.
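A quick check of this property, using a hypothetical `summed_deviation` helper: every actual inside the 50 to 150 range (limits included, an assumption) yields the same sum of 100, actuals outside the range push the sum up by 2 for every unit of miss, and a tighter range around the same actual yields a smaller sum.

```python
def summed_deviation(actual: float, upper: float, lower: float) -> float:
    """Hypothetical helper: |actual - upper| + |actual - lower|, the quantity squared in Step 1."""
    return abs(actual - upper) + abs(actual - lower)


upper, lower = 150, 50

# Any actual inside the range (limits included) gives the range width, 100
for actual in (50, 75, 100, 125, 150):
    assert summed_deviation(actual, upper, lower) == upper - lower

# Outside the range, each unit of miss adds 2 to the sum
for actual in (25, 175, 200):
    print(actual, summed_deviation(actual, upper, lower))
# 25 150
# 175 150
# 200 200

# A tighter range (90 to 110) around the same actual gives a smaller sum
print(summed_deviation(100, 110, 90))  # 20
```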

Step 2: The next step is to take the square root of this mean squared error and divide it by the actual. The square root brings the “units” back to their original scale, which allows you to divide by the actual. What you end up with is a comparison of the RMSE to the actual, which may be represented as a percentage: the smaller the error, the closer the fit to the actual.

Sqrt(MSE)/actual=Uncertainty Error (E)

Sqrt(5000)/100=71%
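Step 2 as a hypothetical `uncertainty_error` helper that takes the MSE from Step 1. Like the article, it divides by the actual, so it assumes a nonzero actual.

```python
import math


def uncertainty_error(mse: float, actual: float) -> float:
    """Hypothetical helper for Step 2: RMSE divided by the actual (actual must be nonzero)."""
    return math.sqrt(mse) / actual


e = uncertainty_error(5000, 100)
print(f"{e:.2%}")  # 70.71%
```

The article rounds this 70.71% to 71%.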

Step 3: For the reliability of the range forecast, we combine this with a probabilistic scoring function. One way to do this is to compare forecast probabilities with outcomes on a forecast-by-forecast basis: subtract the assigned forecast probability from the outcome (coded as 1 or 0) and square the result.

Here we are not looking at the precision of the forecast but measuring the skill of binary probabilistic forecasts: whether or not the actual occurred within the stated range. If the actual result falls between the upper and lower limits, the outcome is treated as true and given the value of 1. If the actual falls outside the range, it is given a 0.

For example, if our upper limit was 150 and lower limit 50, we gave this a 90% probability of occurring within that range, and the actual was 100, then the statement is true. This gives 1 minus the probability of 90%, squared, which equals .01. If the actual had fallen anywhere outside the predicted range, our score would be much higher at .81 (0 minus the probability of 90%, squared). Because it answers a binary probabilistic question (in or out of range), it gives no value to the size of the range or to how far outside the range the actual falls; it only produces a score from 0 to 1. This is why the uncertainty error, which captures range size and precision, is included, and it is what makes this new scoring function unique.

(Outcome Result – Probability Assigned)^2=Goodness of Fit (score)

(1-.9)^2=.01
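Step 3 as a hypothetical `goodness_of_fit` helper. The article does not say how an actual landing exactly on a limit should be coded; this sketch assumes it counts as inside the range.

```python
def goodness_of_fit(actual: float, upper: float, lower: float, prob: float) -> float:
    """Hypothetical helper for Step 3: (Outcome - P)^2.

    Outcome is 1 if the actual is inside the range (limits included, an assumption), else 0.
    """
    outcome = 1 if lower <= actual <= upper else 0
    return (outcome - prob) ** 2


print(round(goodness_of_fit(100, 150, 50, 0.9), 2))  # 0.01 (inside the range)
print(round(goodness_of_fit(200, 150, 50, 0.9), 2))  # 0.81 (outside the range)
```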

It should also be noted that if you had given your forecast the same range (150 to 50) with only a 10% probability and the actual fell outside the range (200, for example), you would get the same score of .01 (0 minus the probability of 10%, squared). Following the logic, this is understandable. A 10% probability says that the actual result will most likely not fall within the range. The inverse of your statement is that there is a 90% probability the actual will fall anywhere outside your range, so your most likely range is all other numbers. Whether the actual is 200 or 20,000, this component scores you as correct. While this is important, it is once again only half of the equation, which is why we combine goodness of fit and reliability with a measure of how wide the range is and how far the actual falls from it, to get a complete picture.
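To see why the probability term is only half of the equation, the sketch below (hypothetical `components` helper, same assumptions as the steps above) scores the 10% forecast for actuals of 200 and 20,000. The probability term is .01 in both cases, while the uncertainty error grows as the actual moves further from the range.

```python
import math


def components(actual: float, upper: float, lower: float, prob: float) -> tuple[float, float]:
    """Hypothetical helper returning (uncertainty error, goodness of fit)."""
    mse = (abs(actual - upper) + abs(actual - lower)) ** 2 / 2
    uncertainty = math.sqrt(mse) / actual
    outcome = 1 if lower <= actual <= upper else 0
    fit = (outcome - prob) ** 2
    return uncertainty, fit


# Range 50 to 150 assigned only a 10% probability
for actual in (200, 20_000):
    u, f = components(actual, 150, 50, 0.1)
    print(actual, round(u, 2), round(f, 2))
# 200 0.71 0.01
# 20000 1.41 0.01
```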

Step 4: The final step is simply adding these two parts together to end up with a single score that starts at zero and has no upper limit.

Uncertainty Error (E) + Goodness of Fit (score) =(E)score

.71+.01=.72
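Because both parts sit on one scale, two forecasts for the same outcome can be compared directly. In this sketch the wide forecast is the article's example, while the 90 to 110 range at 80% probability is a hypothetical alternative made up for illustration; `e_score` is likewise a hypothetical helper.

```python
import math


def e_score(actual: float, upper: float, lower: float, prob: float) -> float:
    """Hypothetical helper: uncertainty error (Steps 1-2) plus goodness of fit (Step 3)."""
    uncertainty = math.sqrt((abs(actual - upper) + abs(actual - lower)) ** 2 / 2) / actual
    outcome = 1 if lower <= actual <= upper else 0
    return uncertainty + (outcome - prob) ** 2


# Same actual of 100, two competing forecasts
wide = e_score(100, upper=150, lower=50, prob=0.9)    # the article's example
narrow = e_score(100, upper=110, lower=90, prob=0.8)  # hypothetical tighter forecast
print(round(wide, 2), round(narrow, 2))  # 0.72 0.18
```

The tighter forecast scores lower even though it carries less confidence, which reflects how the metric rewards smaller ranges.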

The lower the score for a set of predictions, the better calibrated those predictions are. A completely accurate forecast would have an (E)score of zero, while one that was completely wrong would take the maximum of 1 on the probability term plus its uncertainty error. So, if you had forecast exactly 100 units with no range up or down, at a 100% probability of occurring, then with the actual of 100 in our example you would be absolutely perfect and have an (E)score of zero. Your range is 100 to 100, which makes the numerator zero, and for reliability the outcome of 1 (true) minus the probability of 1 (100%) also equals zero.

Sqrt(((|100-100|+|100-100|)^2)/2)/100+(1-1)^2=0
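Both edge cases can be checked with the same hypothetical `e_score` helper: the exact, fully confident point forecast scores zero, while a fully confident forecast whose range misses the actual (here, an illustrative actual of 200 against the 50 to 150 range) takes the maximum probability penalty of 1 on top of its uncertainty error.

```python
import math


def e_score(actual: float, upper: float, lower: float, prob: float) -> float:
    """Hypothetical helper: uncertainty error plus (Outcome - P)^2; actual must be nonzero."""
    uncertainty = math.sqrt((abs(actual - upper) + abs(actual - lower)) ** 2 / 2) / actual
    outcome = 1 if lower <= actual <= upper else 0
    return uncertainty + (outcome - prob) ** 2


perfect = e_score(100, 100, 100, 1.0)        # exact point, 100% confident, actual of 100
confident_miss = e_score(200, 150, 50, 1.0)  # 100% confident, actual outside the range
print(perfect)                   # 0.0
print(round(confident_miss, 2))  # 1.71
```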

Forecasts are generally surrounded by uncertainty, and being able to quantify this uncertainty is key to good decision making. For this reason, probability and range forecasts help provide a more complete picture so that better decisions can be made from predictions. We still need to understand and measure those components, and metrics like the (E)score may help, not to grade the forecaster, but to communicate the accuracy, reliability and usefulness of those forecasts.